Performance Hit When Using CSVSerde On Conventional CSV Data

tl;dr: Using CSVSerde for conventional CSV files is about 3X slower…

The following shows timings encountered when processing a simple pipe-delimited CSV file. One Hive table definition uses conventional delimiter processing, and the other uses CSVSerde.

The timings were taken on a small cluster. The file used for testing had 62,825,000 rows, which is again rather small.

Table DDL using conventional delimiter definition:

```sql
CREATE EXTERNAL TABLE test_csv_serde (
  ...  -- column definitions not shown
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '|'
LOCATION '/user/<uname>/elt/test_csvserde/';

-- Load the data one time
INSERT OVERWRITE TABLE test_csv_serde
SELECT * FROM <large table>;
```

Table DDL using CSVSerde:

```sql
CREATE EXTERNAL TABLE test_csv_serde_using_CSV_Serde_reader (
  ...  -- column definitions not shown
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES ("separatorChar" = "|")
LOCATION '/user/<uname>/elt/test_csvserde/';
```

Results:

```text
hive> select count(*) from test_csv_serde;
Time taken: 8.683 seconds, Fetched: 1 row(s)
hive> select count(*) from test_csv_serde_using_CSV_Serde_reader;
Time taken: 27.442 seconds, Fetched: 1 row(s)
hive> select count(*) from test_csv_serde;
Time taken: 8.707 seconds, Fetched: 1 row(s)
hive> select count(*) from test_csv_serde_using_CSV_Serde_reader;
Time taken: 27.41 seconds, Fetched: 1 row(s)
hive> select min(<column>) from test_csv_serde;
Time taken: 10.267 seconds, Fetched: 1 row(s)
hive> select min(<column>) from test_csv_serde_using_CSV_Serde_reader;
Time taken: 29.271 seconds, Fetched: 1 row(s)
```
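The "about 3X" figure can be checked directly against the reported timings. This small Python calculation uses only the numbers printed in the Hive session; nothing else is assumed.

```python
# Timings in seconds, copied from the Hive session above:
# (delimited table, OpenCSVSerde table) for each query.
timings = {
    "count, run 1": (8.683, 27.442),
    "count, run 2": (8.707, 27.41),
    "min": (10.267, 29.271),
}


def slowdown(pair):
    """Return how many times longer the OpenCSVSerde query took."""
    delimited, csv_serde = pair
    return csv_serde / delimited


for name, pair in timings.items():
    print(f"{name}: {slowdown(pair):.2f}x")
```

The count queries come out at roughly 3.15x and the min query at roughly 2.85x, so "about 3X" is a fair summary of this single small-cluster test rather than a general benchmark.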

Why Do All Columns Get Created As String When I Use OpenCSVSerde In Hive?

I am trying to create a table using OpenCSVSerde with some integer and date columns, but the columns get converted to String. Is this the expected outcome? As a workaround, I do an explicit type cast after this step.

```text
hive> create external table if not exists response (...)
    > ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
    > WITH SERDEPROPERTIES (...)
    > location '/prod/hive/db/response'
    > TBLPROPERTIES (...);
OK
Time taken: 0.396 seconds
hive> describe formatted response;
OK
# col_name      data_type   comment
response_id     string      from deserializer
lead_id         string      from deserializer
creat_date      string      from deserializer
```

The Hive documentation states that OpenCSVSerde treats all columns as type String, which explains the change of data type to String.
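The workaround described in the question (read everything as strings, then cast explicitly) can be sketched outside Hive. This Python sketch assumes, hypothetically, that response_id and lead_id should be integers and creat_date an ISO date, since the question does not show the intended types. In Hive itself the equivalent would be a view or CREATE TABLE AS SELECT that applies CAST(... AS INT) and CAST(... AS DATE) to the string columns.

```python
import csv
import io
from datetime import date

# Hypothetical intended types; OpenCSVSerde reports every column as string.
TARGET_TYPES = {
    "response_id": int,
    "lead_id": int,
    "creat_date": date.fromisoformat,
}


def read_as_strings(text):
    """Parse CSV text the way OpenCSVSerde exposes it: every value is a str."""
    return list(csv.DictReader(io.StringIO(text), fieldnames=list(TARGET_TYPES)))


def cast_row(row):
    """Apply the explicit casts; an empty string becomes None (NULL)."""
    return {
        col: convert(row[col]) if row[col] != "" else None
        for col, convert in TARGET_TYPES.items()
    }


rows = read_as_strings("1,42,2019-03-01\n2,,2019-03-02\n")
typed = [cast_row(r) for r in rows]
```

Mapping empty strings to None mirrors Hive, where casting an empty string to INT yields NULL.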

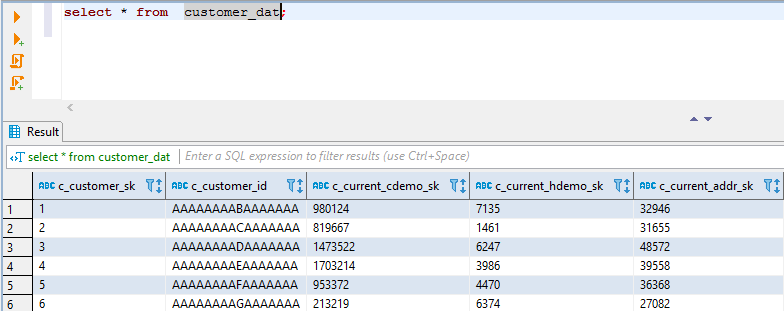

Processing OSS Data Files In Different Formats Using Data Lake Analytics

Alibaba Cloud Data Lake Analytics (DLA) is a serverless, interactive query and analysis service in Alibaba Cloud. You can query and analyze data stored in Object Storage Service (OSS) and Table Store instances simply by running standard SQL statements, without having to move the data.

DLA is now officially available on Alibaba Cloud, and you can apply for a trial of this out-of-the-box data analysis service.

Visit the official documentation page to apply for activation of the DLA service.

In addition to plain text files such as CSV and TSV files, DLA can also query and analyze data files in other formats, such as ORC, Parquet, JSON, RCFile, and Avro. DLA can even query geographical JSON data in line with the ESRI standard and files matching the specified regular expressions.

This article describes how to use DLA to analyze files stored in OSS instances based on their file format. DLA provides various built-in serializers/deserializers (SerDes) for file processing. Instead of writing programs yourself, you can choose one or more SerDes that match the formats of the data files in your OSS instances. Contact us if the SerDes do not meet your needs for processing special file formats.



Empty Fields And Null Values

When LazySimpleSerDe and OpenCSVSerDe read an empty field, they interpret it differently depending on the type of the column. When the corresponding column is typed as string, both interpret an empty field as an empty string. For other data types, LazySimpleSerDe interprets the value as NULL, but OpenCSVSerDe throws an error:

HIVE_BAD_DATA: Error parsing field value '' for field 1: For input string: ""

LazySimpleSerDe will by default interpret the string \N as NULL, but can be configured to accept other strings instead with NULL DEFINED AS '-' or the property serialization.null.format.
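The difference can be modelled in a few lines of Python. This is a simplified sketch of the behaviour described above, not either SerDe's actual code: only string and int columns are modelled, and BadDataError is a hypothetical stand-in for the HIVE_BAD_DATA error.

```python
class BadDataError(ValueError):
    """Hypothetical stand-in for Athena's HIVE_BAD_DATA error."""


def lazy_simple_value(field, col_type, null_format="\\N"):
    """Simplified LazySimpleSerDe behaviour for one field.

    The null marker (default \\N, see serialization.null.format) becomes None,
    string columns keep the text as-is, and an empty or unparseable INT becomes None.
    """
    if field == null_format:
        return None
    if col_type == "string":
        return field
    try:
        return int(field)  # only INT is modelled for non-string columns
    except ValueError:
        return None


def open_csv_value(field, col_type):
    """Simplified OpenCSVSerDe behaviour in Athena for one field.

    String columns keep the text (an empty field stays an empty string);
    an empty field in an INT column raises instead of becoming NULL.
    """
    if col_type == "string":
        return field
    try:
        return int(field)
    except ValueError:
        raise BadDataError(f'For input string: "{field}"') from None
```

Passing null_format="-" to lazy_simple_value corresponds to NULL DEFINED AS '-' in the table definition.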


Hive OpenCSVSerde Changes Your Table Definition

This thing with an ugly name is described in the Hive documentation. Its behaviour is described accurately, but that is no excuse for the vandalism that this thing inflicts on data quality.

When you define a table you specify a data-type for every column. If you use the “OpenCSVSerde” it changes the definition silently so that every column is defined as a string.

The only commands that should change a table definition are create and alter. The behaviour of the OpenCSVSerde is in line with the cavalier attitude to data quality and error handling that is rife in Hive. This “feature” caused us to waste a lot of time in a project I was working on.

This is how it works. You define a table as you want it to be, and specify that you want to read the data from a csv file, like this:

```sql
create external table using_open_csv_serde (
  ...,
  start_date      date,
  paid_timestamp  timestamp,
  amount          decimal,
  approved        boolean,
  comment         varchar(...)
)
row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
with serdeproperties (...)
location '/user/ron/hive_load_failure/';
```

I think they are trying to wind me up by forcing me to use the ridiculous abbreviation “serde” four times in one statement, but this irritation is nothing compared to the way I feel about having my table definition silently changed. This is the resulting table.

Text Data Type Column Not Loading Data For The Values With Hyphen And Special Character

Hi,

I have an external table in which col1 has data type text. When I load the table with the following script, the values containing hyphens and special characters do not load:

```sql
create external table boa_external.test (
  ...  -- col1 is declared with data type text
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (...)
STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
LOCATION 's3://'
TBLPROPERTIES (...)
```

I would appreciate it if you could shed some light on this; it would be of great help.

Can you please confirm where you are trying to create the table? If you are trying to create the table in Athena with the text data type, note that Athena doesn't support the text data type. For the list of supported data types in Athena, please refer to the Athena documentation. I tested it on my end and received the error below:

```text
FAILED: ParseException line 1:34 cannot recognize input near 'text' ')' 'ROW' in column type
This query ran against the "default" database, unless qualified by the query. Please post the error message on our forum or contact customer support
```

From the error, it looks like the table DDL is unable to handle the data with special characters. If you are creating the table with AWS Data Exchange, I recommend opening a support ticket with our AWS Data Exchange queue and providing some sample data so that we can test and dive deep into the issue.



Show The Create Table Statement

Issue a SHOW CREATE TABLE < tablename> command on your Hive command line to see the statement that created the table.

```text
hive> SHOW CREATE TABLE wikicc;
OK
CREATE TABLE `wikicc`(
  ...)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'
STORED AS INPUTFORMAT
  'org.apache.hadoop.mapred.TextInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
LOCATION
  '<path-to-table>'
TBLPROPERTIES (
  ...)
```

To Use A Serde In Queries

To use a SerDe when creating a table in Athena, use one of the following methods:

- Specify ROW FORMAT DELIMITED and then use DDL statements to specify field delimiters, as in the following example. When you specify ROW FORMAT DELIMITED, Athena uses the LazySimpleSerDe by default.

  ```sql
  ROW FORMAT DELIMITED
    FIELDS TERMINATED BY ','
    ESCAPED BY '\\'
    COLLECTION ITEMS TERMINATED BY '|'
    MAP KEYS TERMINATED BY ':'
  ```
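To illustrate what the four delimiters in the ROW FORMAT DELIMITED example mean, here is a simplified Python model of splitting one line with those settings: fields on ',', collection items on '|', map keys on ':', and a backslash as the escape character. The three-column layout (a string, an array of strings, a map of strings) is a hypothetical schema chosen for the example, and the real LazySimpleSerDe handles more cases (deeper nesting, NULL markers, type conversion).

```python
FIELD, ITEM, KEY, ESC = ",", "|", ":", "\\"


def split_unescaped(text, delim, esc=ESC):
    """Split on delim, ignoring delimiters preceded by esc (escapes are kept)."""
    parts, start, i = [], 0, 0
    while i < len(text):
        if text[i] == esc:
            i += 2  # skip the escape and the character it protects
            continue
        if text[i] == delim:
            parts.append(text[start:i])
            start = i + 1
        i += 1
    parts.append(text[start:])
    return parts


def unescape(text, esc=ESC):
    """Drop escape characters, keeping the characters they protected."""
    out, i = [], 0
    while i < len(text):
        if text[i] == esc and i + 1 < len(text):
            out.append(text[i + 1])
            i += 2
        else:
            out.append(text[i])
            i += 1
    return "".join(out)


def parse_row(line):
    """Parse a hypothetical (string, array<string>, map<string,string>) row."""
    name, tags, attrs = split_unescaped(line, FIELD)
    items = [unescape(t) for t in split_unescaped(tags, ITEM)]
    mapping = {}
    for entry in split_unescaped(attrs, ITEM):
        key, value = split_unescaped(entry, KEY)
        mapping[unescape(key)] = unescape(value)
    return unescape(name), items, mapping
```

For example, the line a\,b,x|y,k1:v1|k2:v2 parses to the string "a,b", the list ["x", "y"] and the map {"k1": "v1", "k2": "v2"}: the escaped comma stays inside the first field.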


CSV SerDe Format In Hive For Different Value Types In Table

A CSV file contains a user survey in the messy format below, with many different data types such as string, int, and range.

```text
China, 20-30, Male, xxxxx, yyyyy, Mobile Developer zzzz-vvvv "$40,000-50,000", Consulting
Japan, 30-40, Female, xxxxx, , Software Developer, zzzz-vvvv "$40,000-50,000", Development
. . . . .
```

The code below converts the CSV file to a Hive table, with each column assigned its respective values correctly.

```sql
add jar /home/cloudera/Desktop/project/csv-serde-1.1.2.jar;

drop table if exists 2016table;

create external table 2016table (...)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (...)
STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH "/home/cloudera/survey/2016edited.csv" INTO TABLE 2016table;
```

This code worked fine: each column was populated separately with its values, and all SELECT queries return correct results.

Now, when I create another table from the table above with fewer columns, the values get mixed up across different columns.

Code used for that:

```sql
DROP TABLE IF EXISTS 2016sort;

CREATE EXTERNAL TABLE 2016sort (...)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (...)
STORED AS TEXTFILE;

insert into table 2016sort
select country, age, gender, occupation, salary from 2016table;
```

But this code messes up the values: SELECT gender1 FROM 2016sort returns values of the gender column mixed with values from other columns.

Can anyone help me figure out what is missing?

Which One To Use

In almost all cases the choice between LazySimpleSerDe and OpenCSVSerDe comes down to whether or not you have quoted fields. If you do, there is only one answer: OpenCSVSerDe. If you don't have quoted fields, I think it's best to follow the advice of the official Athena documentation and use the default, LazySimpleSerDe.

Anecdotally, and from some very unscientific testing, LazySimpleSerDe seems to be the faster of the two. This may or may not be due to the difference in how the two serdes parse field values into the data types of the corresponding columns. As I understand it, LazySimpleSerDe only fully parses the columns that are used in the query.
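The rule of thumb above can be expressed as a quick check over a sample of the file. This is a hypothetical helper, not part of Hive or Athena; it only looks for fields that begin with a double quote, so it will miss unusual cases such as quotes that appear only mid-field.

```python
def has_quoted_fields(sample, delimiter=",", quotechar='"'):
    """Return True if any delimiter-separated field starts with quotechar."""
    for line in sample.splitlines():
        for raw_field in line.split(delimiter):
            if raw_field.strip().startswith(quotechar):
                return True
    return False


def choose_serde(sample, delimiter=","):
    """Quoted fields -> OpenCSVSerDe; otherwise the default LazySimpleSerDe."""
    if has_quoted_fields(sample, delimiter):
        return "OpenCSVSerDe"
    return "LazySimpleSerDe"
```

For example, a sample containing 1,"Smith, John",42 points to OpenCSVSerDe, while 1,Smith,42 points to LazySimpleSerDe.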


OpenCSVSerDe For Processing CSV

When you create an Athena table for CSV data, determine the SerDe to use based on the types of values your data contains:

- If your data contains values enclosed in double quotes, you can use the OpenCSV SerDe to deserialize the values in Athena. If your data does not contain values enclosed in double quotes, you can omit specifying any SerDe. In this case, Athena uses the default LazySimpleSerDe. For information, see LazySimpleSerDe for CSV, TSV, and custom-delimited files.

- If your data has UNIX numeric TIMESTAMP values, use the OpenCSVSerDe. If your data uses the java.sql.Timestamp format, use the LazySimpleSerDe.
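The two timestamp styles look quite different in the raw file, as this short Python sketch shows. It assumes the numeric values are epoch milliseconds in UTC (check the unit against your own data), and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def from_unix_millis(value):
    """Numeric UNIX timestamp, assumed to be milliseconds since the epoch (UTC)."""
    return datetime.fromtimestamp(int(value) / 1000, tz=timezone.utc)


def from_java_sql_timestamp(value):
    """Text in java.sql.Timestamp form: yyyy-mm-dd hh:mm:ss[.fffffffff]."""
    whole, _, fraction = value.partition(".")
    parsed = datetime.strptime(whole, "%Y-%m-%d %H:%M:%S")
    if fraction:
        # Java allows nanoseconds; Python datetime keeps microseconds only.
        parsed = parsed.replace(microsecond=int(fraction[:6].ljust(6, "0")))
    return parsed
```

Under these assumptions, the numeric value 1579059880000 and the text 2020-01-15 03:44:40 describe the same instant (in UTC), which is why the choice of SerDe depends on how the file spells it.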

Comma In Between Data Of CSV Mapped To External Table In Hive

I am ingesting a huge CSV file into NiFi, which processes it into a location.

That location is an external table location, from which the data is processed into ORC tables.

Some of the CSV data contains commas within field values. Can you please help me handle this?

file:

a, quick, brown,fox jumps, over, the, lazy

When an external table is placed on the file, only "a, quick, brown, fox, jumps" are shown.

Thank you

Use the CSV SerDe to handle the quote characters in the CSV file:

```sql
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
  "separatorChar" = ",",
  "quoteChar"     = "\""
)
```

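Example:

```text
a, quick,"brown,fox jumps",over,"the, lazy"
```

Create table statement:

```sql
create table hcc (field1 string, field2 string, field3 string, field4 string, field5 string)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
  "separatorChar" = ",",
  "quoteChar"     = "\""
);
```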

Select the data in the table:

```text
hive> select * from hcc;
+---------+---------+------------------+---------+------------+--+
| field1  | field2  |      field3      | field4  |   field5   |
+---------+---------+------------------+---------+------------+--+
| a       | quick   | brown,fox jumps  | over    | the, lazy  |
+---------+---------+------------------+---------+------------+--+
1 row selected
```

So in our create table statement we set the quote character to " and the separator to ,. When we query the table, Hive treats all data enclosed in quotes as one field.
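Python's csv module applies the same quoting rule, so it is a quick way to preview how a line will split before creating the table. This sketch mirrors the separatorChar "," and quoteChar '"' settings; it illustrates the rule and is not OpenCSVSerde itself.

```python
import csv

line = 'a, quick,"brown,fox jumps",over,"the, lazy"'

# Naive splitting on the separator breaks the quoted fields apart.
naive = line.split(",")

# Honouring the quote character keeps commas inside quotes as data.
quoted = next(csv.reader([line], delimiter=",", quotechar='"'))

print(len(naive), naive)
print(len(quoted), quoted)
```

The naive split yields seven pieces, while the quote-aware reader yields the five fields shown in the Hive output. In this Python sketch the unquoted second field keeps its leading space (" quick"), since only quoting, not whitespace, is treated specially.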


Issue A CREATE EXTERNAL TABLE Statement

If the statement that is returned uses a CREATE TABLE command, copy the statement and replace CREATE TABLE with CREATE EXTERNAL TABLE.

- EXTERNAL ensures that Spark SQL does not delete your data if you drop the table.
- You can omit the TBLPROPERTIES field.

```sql
DROP TABLE wikicc;

CREATE EXTERNAL TABLE `wikicc`(
  ...)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'
STORED AS INPUTFORMAT
  'org.apache.hadoop.mapred.TextInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
LOCATION
  '<path-to-table>';
```
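If you need to do this for many tables, the edit can be scripted. This is a minimal Python sketch that assumes the input is SHOW CREATE TABLE output in which TBLPROPERTIES, when present, is the final clause; to_external_ddl is a hypothetical helper name.

```python
import re


def to_external_ddl(ddl):
    """Rewrite SHOW CREATE TABLE output as a CREATE EXTERNAL TABLE statement.

    Assumes TBLPROPERTIES, when present, is the last clause (as it is in
    SHOW CREATE TABLE output); that clause is dropped.
    """
    ddl = re.sub(r"^\s*CREATE\s+TABLE\b", "CREATE EXTERNAL TABLE", ddl,
                 count=1, flags=re.IGNORECASE)
    ddl = re.sub(r"\s*TBLPROPERTIES\s*\(.*\)\s*$", "", ddl,
                 flags=re.IGNORECASE | re.DOTALL)
    return ddl
```

A statement that already starts with CREATE EXTERNAL TABLE is returned unchanged, so the helper is safe to run twice.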

Storage Format And Serde

After creating a table using DLA, run the SHOW CREATE TABLE statement to query the full table creation statement.

CREATE EXTERNAL TABLE nation (


If you compare the table creation statement with the table details, you will notice that STORED AS TEXTFILE in the table creation statement has been replaced with ROW FORMAT SERDE ... STORED AS INPUTFORMAT ... OUTPUTFORMAT ....

DLA uses INPUTFORMAT to read the data files stored in the OSS instance and uses SERDE to resolve the table records from the files.
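The division of labour between the two can be pictured as a two-stage pipeline. The Python below is a conceptual sketch only: the function names, the '|' separator and the column names are assumptions for illustration, not DLA or Hive APIs.

```python
from typing import Dict, Iterator, List


def text_input_format(data: str) -> Iterator[str]:
    """INPUTFORMAT stage: cut the raw file content into records, one per line."""
    for line in data.splitlines():
        if line:
            yield line


def delimited_serde(record: str, columns: List[str], sep: str = "|") -> Dict[str, str]:
    """SERDE stage: turn one record into a row keyed by column name."""
    return dict(zip(columns, record.split(sep)))


def scan(data: str, columns: List[str], sep: str = "|") -> List[Dict[str, str]]:
    """Read every record with the input format, then deserialize each one."""
    return [delimited_serde(r, columns, sep) for r in text_input_format(data)]


rows = scan("0|ALGERIA|0\n1|ARGENTINA|1\n", ["id", "name", "region_id"])
```

Keeping the stages separate is what lets the same input format be combined with different SerDes (for example, a delimited one or a CSV one) over the same text files.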

The following table lists the file formats that DLA currently supports. To create a table for a file in any of these formats, simply specify STORED AS with the format; DLA then selects a suitable SERDE, INPUTFORMAT, and OUTPUTFORMAT.

The following section describes some examples.


Column Names And Order

The columns of the table must be defined in the same order as they appear in the files. Both CSV serdes read each line and map the fields of a record to table columns in sequential order. If a line has more fields than there are columns, the extra fields are skipped, and if there are fewer fields, the remaining columns are filled with NULL.

You might think that if the data has a header, the serde could use it to map fields to columns by name instead of by position, but this is not supported by either serde. On the other hand, this means that the names of the columns are not constrained by the file header, and you are free to name the table's columns however you want.

Given the above, you may have gathered that it's possible to evolve the schema of a CSV table within some constraints. It's possible to add columns, as long as they are added last, and removing the last columns also works, but you can only do one or the other; adding or removing columns at the start or in the middle does not work.

In practice this means that if you at some point realize you need more columns, you can add them, but you should avoid all other schema evolution. For example, if you at some point removed a column from the table, you can't later add columns without rewriting the old files that contain the old column's data.
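The positional rule, and why only appending columns is safe, can be shown with a small Python model. map_fields is a hypothetical helper that imitates the behaviour described above, and the column names are made up for the example.

```python
def map_fields(fields, columns):
    """Map fields to columns by position, as the CSV serdes do.

    Extra trailing fields are dropped; missing trailing fields become None (NULL).
    """
    values = list(fields[: len(columns)])
    values += [None] * (len(columns) - len(values))
    return dict(zip(columns, values))


old_line = ["1", "Ann", "Oslo"]  # written when the table had (id, name, city)

# Safe: a column appended at the end reads as NULL for old files.
appended = map_fields(old_line, ["id", "name", "city", "country"])

# Unsafe: after dropping "city" and later adding "country",
# the old city value is silently read as the country.
dropped_then_added = map_fields(old_line, ["id", "name", "country"])
```

Nothing fails in the unsafe case; the data is simply attributed to the wrong column, which is why rewriting the old files is the only clean fix.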

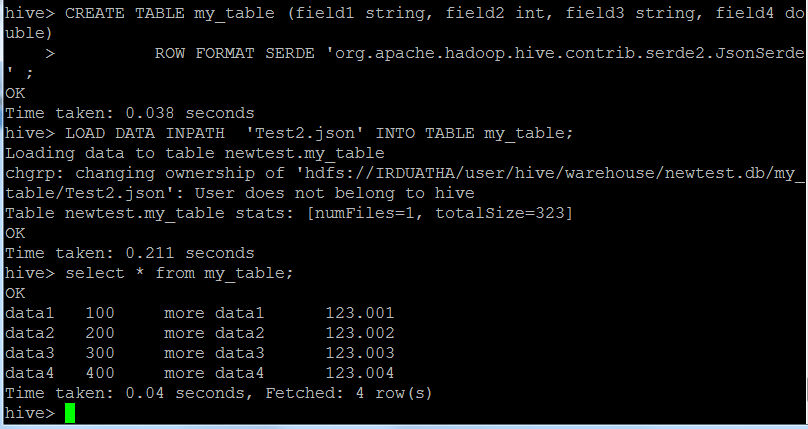

Hive 2.1.1 Table Creation CSV

So I did all the research and couldn’t see the same issue anywhere in HIVE.

I followed the link below and have no issues with data in quotes.

My external table is created with the serde properties below, but for some reason the default escapeChar is being replaced by the quoteChar, which is a double quote for my data.

```sql
CREATE EXTERNAL TABLE IF NOT EXISTS people_full (...)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (...)
STORED AS TEXTFILE;
```

DATA ISSUE:

```text
Sample HDFS source data: "\"Robs business
Target Hive output:      """Robs business
```

So the three double quotes seen in """Robs business after the replacement are causing unwanted delimitation of the data (maybe because Hive cannot handle three double quotes inside the data when the double quote is also my default quote character)?

Why is this happening, and is there a solution? Please help. Many thanks.

Best,
Asha
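The thread does not show where the extra quotes come from, but it may help to see that the two notations describe the same value. In the Python sketch below (using the csv module, not Hive), a backslash-escaped quote is read by setting escapechar to a backslash, and writing the value back with the default settings doubles the quote instead, which produces exactly three leading quote characters. Whether something similar happens in the poster's pipeline is an assumption to verify, not a diagnosis.

```python
import csv
import io

# Source field in which a backslash escapes the embedded quote.
source_line = '"\\"Robs business"'
value = next(csv.reader([source_line], escapechar="\\"))[0]

# Writing the same value with the default dialect (doublequote=True)
# escapes the quote by doubling it instead of using a backslash.
buffer = io.StringIO()
csv.writer(buffer).writerow([value])
doubled = buffer.getvalue().rstrip("\r\n")

print(value)    # "Robs business
print(doubled)  # """Robs business"
```

Reading the doubled form back with a standard CSV reader returns the same single leading quote, so the two representations are equivalent as long as the reader and writer agree on the convention.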


Using CSV SerDe With Hive Create Table Converts All Field Types To String

If I create a table and specify a CSVSerde then all fields are being converted to string type.

```text
hive> create table foo (...)
    > row format serde 'com.bizo.hive.serde.csv.CSVSerde'
    > stored as textfile;
OK
Time taken: 0.22 seconds
hive> describe foo;
OK
a    string    from deserializer
b    string    from deserializer
c    string    from deserializer
Time taken: 0.063 seconds, Fetched: 3 row(s)
```

That Serde is from

If I try the serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde' from this page, I see the same thing: all fields are changed to type string.

- Hive version 1.2.1
- Hadoop version 2.7.0
- Java version "1.7.0_80"

Yes, com.bizo.hive.serde.csv.CSVSerde only creates strings. This is how it was built and how it will always work; there is no option to change it. I think it is likely that this would work for the majority of your variables. That being said, I would use a SELECT statement with a regex-based column specification, which can be used in Hive releases prior to 0.13.0, or in 0.13.0 and later releases if the configuration property hive.support.quoted.identifiers is set to none. This means you can quickly build a new table, altering the types of the few variables you need as doubles or ints.
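The idea can be sketched in Python: pick columns by a regular expression, then cast only the few that need a numeric type. In Hive the pattern is a Java regex written in backticks (the Hive manual's example selects every column except ds and hr with the pattern (ds|hr)?+.+); the sketch uses an equivalent negative lookahead because Python only supports possessive quantifiers from version 3.11. The column names a, b, c follow the foo table above, and which of them should be numeric is an assumption.

```python
import re


def select_columns(columns, pattern):
    """Return the column names that fully match pattern, in table order."""
    regex = re.compile(pattern)
    return [c for c in columns if regex.fullmatch(c)]


def cast_selected(row, casts):
    """Convert only the columns listed in casts; the rest stay strings."""
    return {col: casts[col](val) if col in casts else val for col, val in row.items()}


columns = ["a", "b", "c"]
# Everything except b, like the Hive-style pattern (b)?+.+
kept = select_columns(columns, r"(?!b$).+")

row = {"a": "1", "b": "x", "c": "2.5"}  # every value arrives as a string
typed = cast_selected(row, {"a": int, "c": float})
```

In Hive the cast step would be written as CAST(a AS INT) and CAST(c AS DOUBLE) in the SELECT that builds the new table.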

When To Use CSVSerde:

For example: If we have a text file with the following data:

```text
col1  col2         col3
------------------------
121   Hello World  4567
232   Text         5678
343   More Text    6789
```

Pipe delimited it would look like:

```text
121|Hello World|4567|
232|Text|5678|
343|More Text|6789|
```

But blank-delimited with quoted text, it would look like:

```text
121 'Hello World' 4567
232 Text 5678
343 'More Text' 6789
```

Notice that the text may or may not have quote marks around it: text only needs to be quoted if it contains a blank. This is a particularly nasty set of data, and you would need custom code to parse it, unless you use CSVSerde.

CSVSerde can handle this data with ease. Blank-delimited, quoted text files are parsed correctly without any coding when you use the following table declaration:

```sql
CREATE TABLE my_table (col1 string, col2 string, col3 string)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
  "separatorChar" = " ",
  "quoteChar"     = "'"
);
```
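Python's csv module applies the same separator and quote rules, so it can preview how these rows will split. This sketch assumes the data is exactly the three blank-delimited rows shown above; it models the separatorChar and quoteChar settings, not OpenCSVSerde's implementation.

```python
import csv

lines = [
    "121 'Hello World' 4567",
    "232 Text 5678",
    "343 'More Text' 6789",
]

# Blank as the separator, single quote as the quote character.
rows = list(csv.reader(lines, delimiter=" ", quotechar="'"))

for row in rows:
    print(row)
```

Each row comes back with exactly three fields, whether or not the middle value was quoted, which is the property that makes this layout manageable.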


Hive 2.0+: Syntax Change

Hive 2.0+: New syntax

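```sql
DESCRIBE [EXTENDED | FORMATTED]
  [db_name.]table_name [PARTITION partition_spec] [col_name ( [.field_name] | [.'$elem$'] | [.'$key$'] | [.'$value$'] )* ];
```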

Warning: The new syntax could break current scripts.

- It no longer accepts DOT separated table_name and column_name. They would have to be SPACE-separated. DB and TABLENAME are DOT-separated. column_name can still contain DOTs for complex datatypes.

- Optional partition_spec has to appear after the table_name but prior to the optional column_name. In the previous syntax, column_name appears in between table_name and partition_spec.

Examples:

Files Matching Regular Expressions

Generally, files of this type are stored in the OSS instance in the plain text format. Each row indicates a record in the table and can match a regular expression.

For example, Apache WebServer log files are of this type.

The content of a log file is as follows:

```text
127.0.0.1 - frank "GET /apache_pb.gif HTTP/1.0" 200 2326
127.0.0.1 - - "GET /someurl/?track=Blabla HTTP/1.1" 200 5864 - "Mozilla/5.0 AppleWebKit/525.19 Chrome/1.0.154.65 Safari/525.19"
```

Each row of the file is expressed using the following regular expression and columns are separated with space:

)?
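To show how a regex-based SerDe turns each line into columns, here is a Python sketch. The pattern is modelled on the Apache log example in the Hive RegexSerDe documentation and is an assumption, since the expression above is truncated; the column names are hypothetical, one per capture group. The sample lines above also appear to have lost their bracketed timestamp field, so the usage note supplies a hypothetical one.

```python
import re

# Assumed pattern, modelled on the Hive RegexSerDe Apache log example.
LOG_PATTERN = re.compile(
    r'([^ ]*) ([^ ]*) ([^ ]*) (-|\[[^\]]*\]) ([^ "]*|"[^"]*") '
    r'(-|[0-9]*) (-|[0-9]*)(?: ([^ "]*|"[^"]*") ([^ "]*|"[^"]*"))?'
)
# Hypothetical column names, one per capture group.
COLUMNS = ["host", "identity", "user", "time", "request",
           "status", "size", "referer", "agent"]


def parse_log_line(line):
    """Return a dict of column values, or None if the line does not match."""
    match = LOG_PATTERN.fullmatch(line)
    if match is None:
        return None
    return dict(zip(COLUMNS, match.groups()))
```

Applied to the first sample line with a timestamp such as [10/Oct/2000:13:55:36 -0700] restored after frank, parse_log_line returns host 127.0.0.1, status 200 and size 2326, with referer and agent left as None because the optional trailing group is absent.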

The table creation statement for the preceding file format is as follows:

CREATE EXTERNAL TABLE serde_regex(
